Assigning Personality to Generative AI

Background

Recently, Microsoft began integrating some of its powerful artificial intelligence (AI) tools into its Office products. Being an early adopter, Lexmark was quick to begin experimenting with Microsoft Copilot, a generative AI chatbot that can be used securely with a company’s own data.

We were fortunate to try some beta features of Copilot, including its ability to go beyond just answering questions about the data and actually complete tasks for users. This was an amazing opportunity to play with some of the most promising helper AI tools coming out.

Approach

As part of the Connected Technology team, I was asked to explore what these features could look like in our internal chatbot. I was immediately inspired by a couple of articles I had read over the years. The first was about the value people perceive in operational transparency. In one study, researchers found that users felt they received more value from a complicated task, such as searching flight options to purchase, when they could see the work the algorithm was doing.

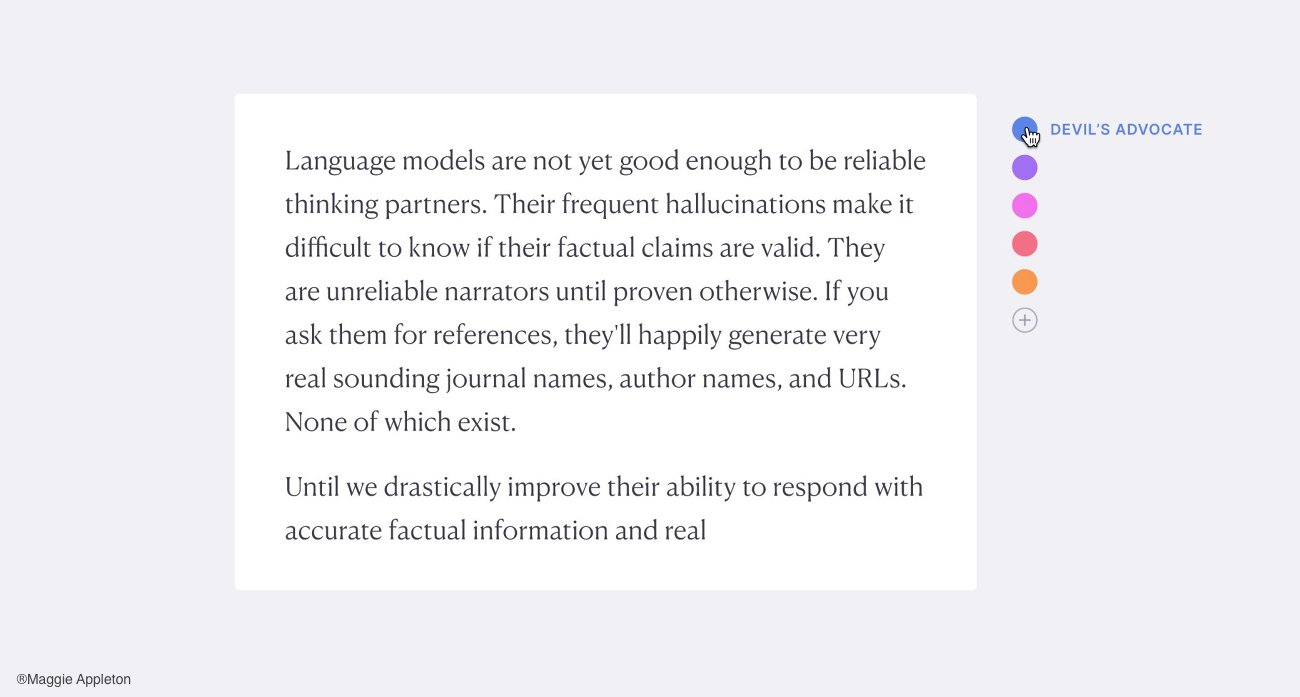

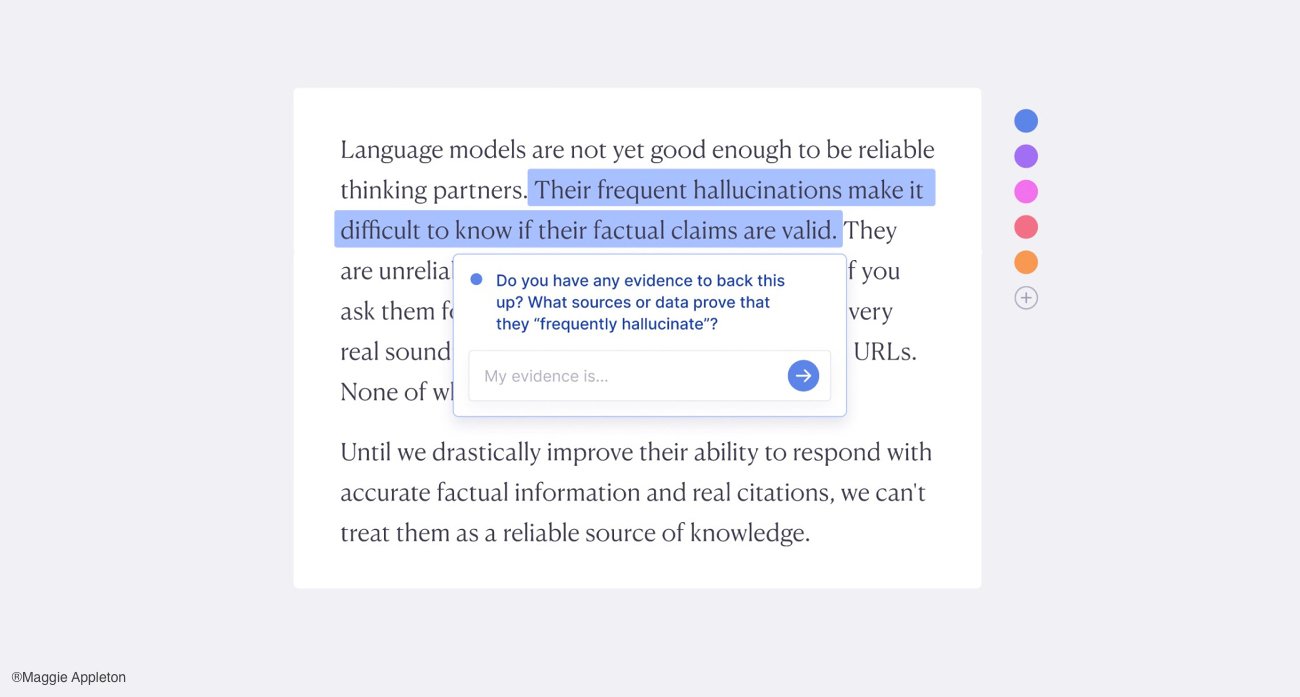

Another source of inspiration was designer Maggie Appleton’s concept of Daemons: the idea that AI could have multiple personalities that interact with a user in order to spur different modes of thinking about a topic.

Humans tend to anthropomorphize everything. We give our cars names, make movies about emotional robots, and can’t even walk down the street without seeing faces everywhere. This tendency to apply human characteristics to inanimate objects could play an interesting role in how we design generative AI in the coming years.

So how will we interact with this new technology? Will we be partners achieving goals hand-in-(robot)hand? Or will we relegate AI to being subservient helper bots? Would we even want AI to play a leading role in our lives? Although these questions were beyond the scope of my assignment, they helped me create a framework for how the Copilot features could be presented to users without coming off as hokey.

Exploration

I began exploring concepts by asking a few questions. How would it affect me if AI were an all-knowing, all-powerful helper? What if it were just a group of capable, yet dim-witted assistants? Should it present itself as separate entities with varying skills? Ultimately, I settled on a familiar metaphor, the odd couple: two very different personalities that work together and play off each other’s strengths, like C-3PO and R2-D2.

This was a way to represent the different problems AI would be trying to solve. One can answer any question or calculate the probabilities of multiple scenarios, while the other doesn’t even speak, yet you trust it to fly your starfighter or deliver your most important messages.

Result

The final concept divided the Copilot feature into two separate agents a user would interact with. The main agent would provide answers and guidance for all your questions, while the support agent would follow up, asking if it could complete associated tasks for you. After extended use, the AI would learn and anticipate common questions you and your colleagues have, while taking the initiative to perform more functions once you’ve become comfortable with its work.

Unfortunately, the priorities of our group changed and we had to put the exploration on hold; however, my next step would have been to design a set of prototype tests to get quick feedback on the concepts. It would be fairly easy and economical to set up an unmoderated study on a remote testing site like UserZoom or Userlytics to gauge people’s attitudes about trust, perception of competence, and ultimately their preferred experience. Over time, the study could be updated to test new Copilot skills and users’ attitudes toward them.